Understanding Checksum: Ensuring Data Integrity and Security

Introduction to Checksum

A checksum is a short value calculated from a specific set of data. It is derived by applying a mathematical function to the content, which produces a compact signature of that content. The primary purpose of a checksum is to verify the integrity of data during transmission or storage. By comparing the checksum computed at different points in the data’s lifecycle, users can tell whether the data has been altered. The signature is not strictly unique: a fixed-size output cannot distinguish every possible input, so two different inputs can occasionally share a checksum (a collision). How hard collisions are to find depends on the algorithm.

Checksums are used across many applications and protocols. For instance, when a file is transferred over a network, a checksum helps confirm that the file received is identical to the original. If the checksum calculated from the received data differs from the checksum of the original, the file has been altered or corrupted and should be transmitted again. Algorithms such as MD5, SHA-1, and SHA-256 are frequently used to generate these checksums. They differ in speed and security, and SHA-256 is currently favored wherever resistance to deliberately crafted collisions matters.

Fundamentally, creating a checksum means taking input data (a file, a message, or any other sequence of bytes) and applying a deterministic function to it. The output is a fixed-size value, usually written as a string of hexadecimal characters, that is the same length no matter how large the input is. While checksums are essential for detecting errors, they do not encrypt or conceal the data itself, and a plain checksum does not prove who produced the data. Checksums should therefore be combined with other security measures for comprehensive data protection.
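
As a minimal sketch of that input-to-fixed-size-output idea, the following uses SHA-256 from Python's standard `hashlib` module. The helper name `sha256_hex` is an illustrative choice, not something prescribed by any particular tool.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as 64 hexadecimal characters."""
    return hashlib.sha256(data).hexdigest()


# A 3-byte input and a 300,000-byte input both yield a 64-character digest.
short_digest = sha256_hex(b"abc")
long_digest = sha256_hex(b"abc" * 100_000)

print(short_digest)
print(len(short_digest), len(long_digest))  # 64 64
```

The digest length stays constant while the input grows, which is what makes checksums cheap to publish and compare.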

Practical Uses of Checksum

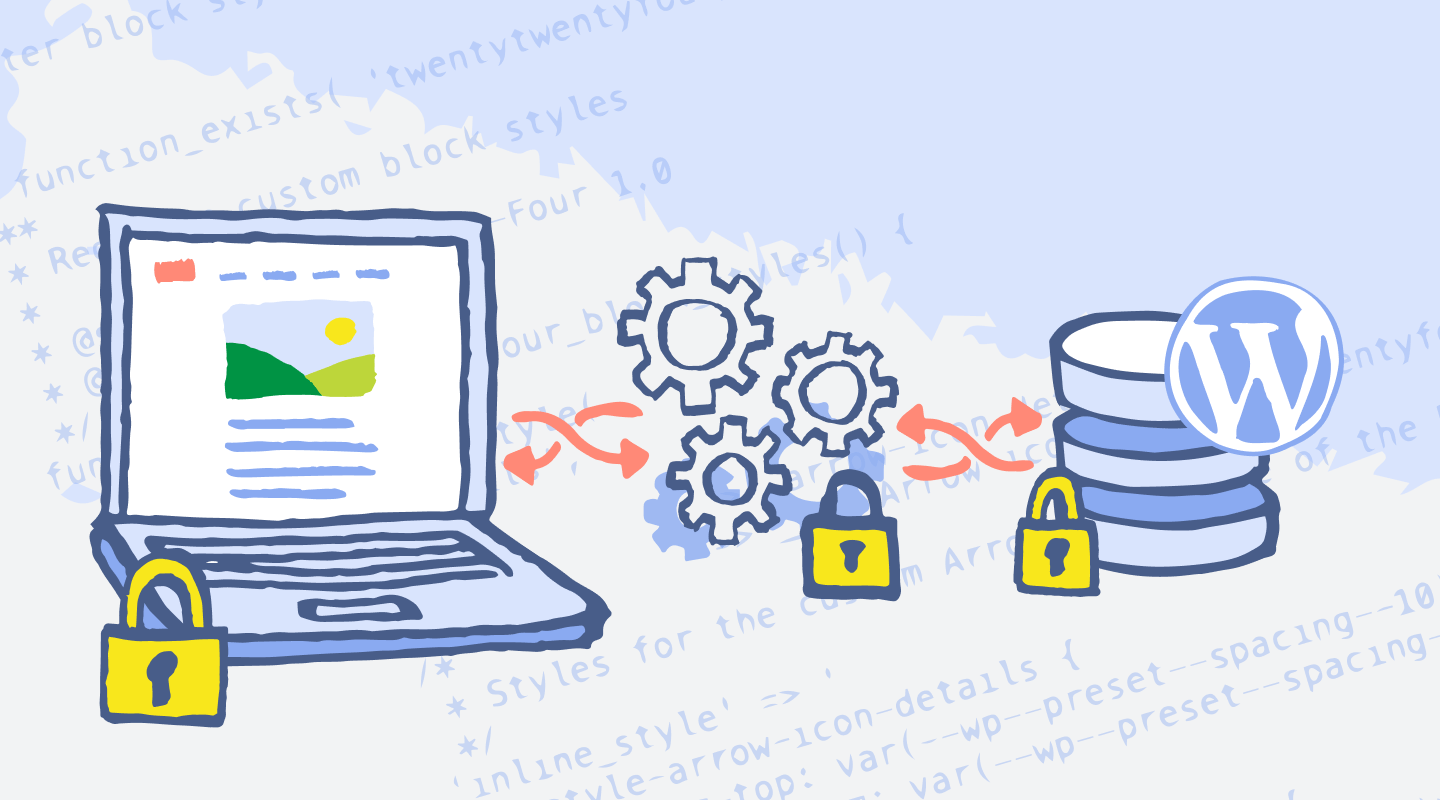

One of the most visible uses of checksums is in software downloads. When users download files, especially software, they need assurance that the file has not been corrupted or tampered with. Developers often publish a checksum value alongside the download link. After the download completes, users can compute the checksum of the downloaded file and compare it to the published value. If the values match, the file is the same as the one the publisher hashed; if they differ, the file was corrupted during download or was altered. This check guards against tampering only when the published checksum comes from a trusted source and a collision-resistant algorithm such as SHA-256 is used, because an attacker who can replace the file can often replace a checksum hosted next to it.
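
The comparison step can be sketched as follows, assuming the publisher provides a SHA-256 value in hexadecimal. The function names `file_sha256` and `matches_published` are hypothetical helpers for illustration; reading in chunks keeps memory use flat for large downloads.

```python
import hashlib
import hmac
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large downloads are never fully loaded into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path: Path, published_hex: str) -> bool:
    """Compare the computed digest with the published one, ignoring case and whitespace."""
    expected = published_hex.strip().lower()
    return hmac.compare_digest(file_sha256(path), expected)
```

A caller would pass the path of the downloaded file and the checksum string copied from the download page, then refuse to install the file if `matches_published` returns `False`.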

Checksums are also built into data transfer at several layers. Ethernet frames carry a CRC, and TCP and UDP segments carry a 16-bit checksum, so most random transmission errors are caught and the damaged data is discarded or retransmitted before an application ever sees it. Application protocols such as FTP (File Transfer Protocol) and HTTP (Hypertext Transfer Protocol) rely mainly on these lower-layer checks rather than mandating a checksum of their own, which is why a separate end-to-end check is often added: the sender computes a checksum before the transfer, the receiver computes one afterward, and a mismatch prompts retransmission of the corrupt file. This practice makes data transfer more reliable and keeps copies consistent.
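
Beneath the application protocols, TCP and UDP use the 16-bit ones' complement "Internet checksum" described in RFC 1071. The sketch below implements only that arithmetic over a byte string; it omits the pseudo-header that real TCP and UDP include, and the function names are illustrative.

```python
def internet_checksum(data: bytes) -> int:
    """Compute the 16-bit ones' complement checksum from RFC 1071."""
    if len(data) % 2:
        data += b"\x00"  # pad odd-length input with a zero byte
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]  # add each big-endian 16-bit word
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back into 16 bits
    return ~total & 0xFFFF


def arrived_intact(data: bytes, sent_checksum: int) -> bool:
    """Receiver-side check: recompute the checksum and compare it with the sent value."""
    return internet_checksum(data) == sent_checksum


payload = bytes.fromhex("0001f203f4f5f6f7")  # sample bytes from RFC 1071
checksum = internet_checksum(payload)
print(hex(checksum))  # 0x220d

damaged = bytes([payload[0] ^ 0x01]) + payload[1:]  # flip one bit "in transit"
print(arrived_intact(payload, checksum), arrived_intact(damaged, checksum))  # True False
```

This checksum is fast but weak: it cannot detect, for example, two 16-bit words being swapped, which is one reason end-to-end file hashes are layered on top.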

Another practical application of checksums lies in file archiving and version control systems. When archiving data, checksums recorded at archive time make it possible to confirm later that files have not changed, exposing unintentional modifications and silent corruption. Version control systems such as Git go further and identify content by its hash: every file, directory tree, and commit is stored under a hash of its contents (SHA-1 by default), so each commit ID reflects that exact snapshot and the history behind it. This lets developers track alterations and revert when necessary, and it means a corrupted or altered object no longer matches its ID. Across these domains, checksums provide a dependable mechanism for data reliability.
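
To make the Git case concrete, the sketch below reproduces how Git derives the ID of a file's contents (a "blob") in its default SHA-1 object format: it hashes a small header, `blob <size>` followed by a NUL byte, and then the content. Commit IDs are computed the same way over a commit object. The function name `git_blob_id` is an illustrative choice.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Return the object ID Git assigns to file content in SHA-1 repositories."""
    header = b"blob " + str(len(content)).encode("ascii") + b"\x00"
    return hashlib.sha1(header + content).hexdigest()


# The empty file has a well-known ID in every SHA-1 Git repository.
print(git_blob_id(b""))  # e69de29bb2d1d6434b8b29ae775ad8c2e48c5391

# Any change to the content, however small, produces a different ID.
print(git_blob_id(b"hello world\n") == git_blob_id(b"hello world!\n"))  # False
```

The result can be cross-checked against `git hash-object <file>` for the same content.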

The Significance of Checksum in Data Integrity

In modern data management, maintaining data integrity is paramount. Checksums help ensure that data remains accurate and unaltered during storage and transmission. By recomputing a checksum and comparing it with a previously recorded value, organizations can detect unintentional modifications and corruption caused by hardware failures. Detecting intentional tampering is also possible, but only when a cryptographic hash is used and the recorded value is kept where an attacker cannot change it.

Checksums work by generating a hash value for a specific dataset. This value acts as a fingerprint for the information, allowing systems to compare the data at different stages. If the checksum changes, the data has changed, which should prompt further investigation. An unchanged checksum means the data is very probably unchanged, with the level of assurance depending on the algorithm. Checksums are not confined to one context; they are used in both data storage systems and communication protocols.
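
The stage-by-stage comparison can be sketched as a baseline of fingerprints that is checked again later. The record names and values below are made up for illustration, and `snapshot` and `changed_keys` are hypothetical helper names.

```python
import hashlib


def snapshot(records: dict[str, bytes]) -> dict[str, str]:
    """Record a SHA-256 fingerprint for every named piece of data."""
    return {name: hashlib.sha256(data).hexdigest() for name, data in records.items()}


def changed_keys(baseline: dict[str, str], current: dict[str, bytes]) -> list[str]:
    """Return the names whose current fingerprint differs from the baseline."""
    now = snapshot(current)
    return sorted(name for name in baseline if now.get(name) != baseline[name])


stored = {"invoice-1": b"amount=100", "invoice-2": b"amount=250"}
baseline = snapshot(stored)

# Later stage: one record has been altered by a single character.
later = {"invoice-1": b"amount=100", "invoice-2": b"amount=950"}
print(changed_keys(baseline, later))  # ['invoice-2']
```

A record that has gone missing is also reported, because its fingerprint can no longer be reproduced.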

Neglecting checksum validation can have severe consequences, as two illustrative scenarios show. An organization that stores a database without checksum verification can lose significant amounts of data to a hardware malfunction that silently corrupts records, because nothing flags the damage until backups have been overwritten with the corrupted copy. Similarly, a financial institution that does not protect its transaction logs with integrity checks has no reliable way to notice that entries were altered after the fact, which leaves room for fraud. Both scenarios underline the value of checksum verification as a preventive measure against data loss and security breaches.

The economic and operational benefits of checksum verification are also significant. Checksums do not prevent corruption, but organizations that verify them routinely detect it early, while a good copy is still available, which reduces operational disruption and the cost of recovery. In this way, checksums strengthen data safety and also improve an organization’s overall efficiency and reliability.

Common Types of Checksum Algorithms

Checksum algorithms verify that data has been stored and transferred accurately. Several algorithms serve this purpose, each with its own strengths and weaknesses. Among the most commonly used are CRC (Cyclic Redundancy Check), MD5 (Message-Digest Algorithm 5), SHA-1 (Secure Hash Algorithm 1), and SHA-256 (the 256-bit member of the Secure Hash Algorithm 2 family). CRC is an error-detecting code designed to catch accidental damage, while MD5, SHA-1, and SHA-256 are cryptographic hash functions that are often used as checksums.

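CRC is widely used in network communications and data storage. It treats the data as a binary polynomial, divides it by a fixed generator polynomial, and uses the remainder as a fixed-size checksum (32 bits in the common CRC-32). This makes it very good at detecting accidental changes such as flipped bits and short bursts of errors, and its computational efficiency suits real-time applications. It offers no protection against deliberate tampering, however, because anyone who modifies the data can easily recompute a matching CRC.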
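
The following is a deliberately slow, bit-at-a-time sketch of CRC-32 as used by zlib, Ethernet, and PNG (reflected polynomial `0xEDB88320`), checked against Python's built-in `zlib.crc32`. Production code would use the library routine; the hand-written version is here only to show the mechanism.

```python
import zlib


def crc32_bitwise(data: bytes) -> int:
    """Compute CRC-32 one bit at a time using the reflected polynomial 0xEDB88320."""
    crc = 0xFFFFFFFF  # initial value
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 1:
                crc = (crc >> 1) ^ 0xEDB88320  # "subtract" the generator polynomial
            else:
                crc >>= 1
    return crc ^ 0xFFFFFFFF  # final inversion


message = b"123456789"
print(hex(crc32_bitwise(message)))  # 0xcbf43926, the standard CRC-32 check value
print(crc32_bitwise(message) == zlib.crc32(message))  # True

corrupted = b"123456788"  # the last byte differs by a single bit
print(crc32_bitwise(corrupted) == crc32_bitwise(message))  # False
```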

MD5, although once a popular choice for checksumming, has notable vulnerabilities that compromise its reliability. It produces a 128-bit hash value and is often used to verify the integrity of files. However, due to its susceptibility to collision attacks, where two different inputs produce the same hash, it is discouraged for security-focused applications.

SHA-1 produces a longer, 160-bit hash and was long considered a stronger alternative to MD5, but it has also proven vulnerable. A practical collision was publicly demonstrated in 2017, and the security community recommends against its use in security-critical applications. It is still found in legacy systems.

SHA-256, part of the SHA-2 family, is widely recognized for its strong security and is used in many applications, including TLS (formerly SSL) certificates and cryptocurrency. It produces a 256-bit hash, and no practical collision attack against it is publicly known, which makes it far more resistant than its predecessors. It is recommended for applications where data integrity and security are paramount.
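
The digest sizes quoted above can be confirmed with Python's standard `hashlib` module. This is a small sketch with an illustrative helper name, `digest_bits`; note that some hardened Python builds disable MD5.

```python
import hashlib


def digest_bits(algorithm: str) -> int:
    """Return the output size, in bits, of a hashlib algorithm."""
    return hashlib.new(algorithm).digest_size * 8


for name in ("md5", "sha1", "sha256"):
    digest = hashlib.new(name, b"same input").hexdigest()
    print(f"{name:7} {digest_bits(name):3} bits  {digest}")
```

Each extra bit of output doubles the number of possible digests, but output size alone does not make an algorithm safe: MD5 and SHA-1 are discouraged because of structural weaknesses, not only because they are shorter.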

The choice of checksum algorithm is crucial and should align with the specific requirements of the application. As security threats evolve, selecting the appropriate algorithm can significantly enhance data integrity and safeguard against potential data breaches or errors.